What is Llama 4?

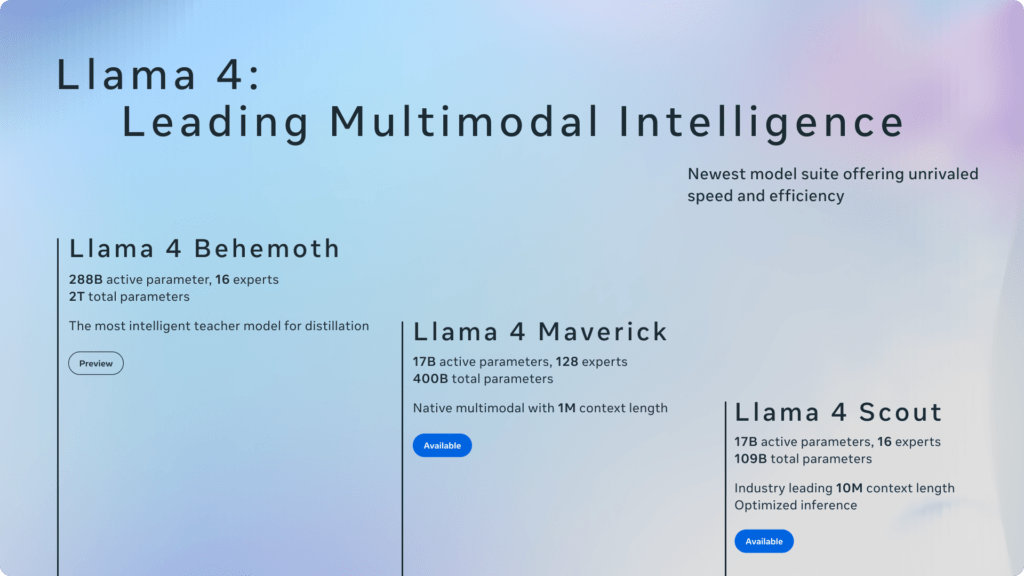

Meta has announced three core variants of Llama 4, two of which (Scout and Maverick) are publicly released:

- Llama 4 Scout

A compact, single-GPU model ideal for developers and startups.

- Llama 4 Maverick

A high-performance model for multimodal enterprise use cases.

- Llama 4 Behemoth (Preview)

A STEM-specialized model designed for advanced research and training other models.

Source: Meta AI

What Makes Llama 4 Different from Previous Models?

1. Adoption of Mixture-of-Experts (MoE) Architecture

- Llama 4 Scout

Features 16 experts with 17 billion active parameters and a total of 109 billion parameters. According to Meta, its design allows it to fit on a single NVIDIA H100 GPU when Int4 quantization is used.

- Llama 4 Maverick

Incorporates 128 experts, maintaining 17 billion active parameters but expanding to a total of 400 billion parameters. It caters to more complex tasks and, according to Meta, can be deployed on a single NVIDIA H100 DGX host (a multi-GPU server) rather than a single GPU.
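
To make the difference between active and total parameters concrete, here is a minimal Python sketch. The parameter counts are the figures quoted above. The `MoEModel` class and the `route_top_k` helper are hypothetical names introduced only for illustration: they show a generic top-k router, not Meta's actual routing implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoEModel:
    name: str
    experts: int
    active_params_b: float  # billions of parameters used per token
    total_params_b: float   # billions of parameters stored in the model

    def active_fraction(self) -> float:
        """Share of the stored weights that take part in one token's forward pass."""
        return self.active_params_b / self.total_params_b


def route_top_k(router_scores: list[float], k: int = 1) -> list[int]:
    """Return the indices of the k highest-scoring experts for one token.

    Illustrative only: real routers are learned layers. The point is that
    most experts are skipped for any given token.
    """
    ranked = sorted(range(len(router_scores)), key=lambda i: router_scores[i], reverse=True)
    return sorted(ranked[:k])


# Figures as quoted in this article.
SCOUT = MoEModel("Llama 4 Scout", experts=16, active_params_b=17, total_params_b=109)
MAVERICK = MoEModel("Llama 4 Maverick", experts=128, active_params_b=17, total_params_b=400)

if __name__ == "__main__":
    for model in (SCOUT, MAVERICK):
        print(f"{model.name}: {model.active_fraction():.1%} of parameters active per token")
    # Toy router scores for four experts; only the best-scoring expert runs.
    print(route_top_k([0.1, 0.7, 0.05, 0.15], k=1))
```

Both models do roughly the same amount of per-token compute (17 billion active parameters), but Maverick stores far more weights, which is why its memory footprint is much larger than Scout's.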

2. Expanded Context Window

- Llama 4 Scout

Supports up to 10 million tokens, a substantial leap from the 128,000 tokens in Llama 3, facilitating tasks like analyzing extensive documents or large codebases.

- Llama 4 Maverick

Offers a 1 million-token context window, enhancing its capability in maintaining coherent and contextually rich interactions over extended conversations.
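
As a rough planning aid, the sketch below estimates whether a set of documents fits in each model's context window. The window sizes are the ones quoted above. The four-characters-per-token ratio, the output reserve, and the function names are assumptions for illustration. Real token counts depend on the tokenizer and the language of the text.

```python
# Context window sizes as quoted in this article.
CONTEXT_WINDOWS = {
    "Llama 4 Maverick": 1_000_000,
    "Llama 4 Scout": 10_000_000,
}

CHARS_PER_TOKEN = 4  # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Rough token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def models_that_fit(documents: list[str], reserve_for_output: int = 4_096) -> list[str]:
    """List the models whose context window can hold all documents plus a reply."""
    needed = sum(estimate_tokens(doc) for doc in documents) + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


if __name__ == "__main__":
    small_corpus = ["word " * 1_000]          # about 5,000 characters
    large_corpus = ["x" * 8_000_000]          # about 2 million estimated tokens
    print(models_that_fit(small_corpus))
    print(models_that_fit(large_corpus))
```

An estimate like this only tells you whether the input fits. It says nothing about answer quality at very long lengths, which should be evaluated on your own data.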

3. Native Multimodal Capabilities

- Visual question answering

- Image-based recommendations

- Graph and chart interpretation

- Multi-image summarization
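
For tasks such as visual question answering or multi-image summarization, a request typically bundles one or more images with a text prompt. The sketch below builds such a request as plain Python data. The message layout (a `role` plus a list of typed `content` parts) is an assumption modeled on the chat-style format many multimodal inference stacks accept, and `build_vqa_messages` is a hypothetical helper. Check the documentation of your serving library for the exact schema it expects.

```python
def build_vqa_messages(image_urls: list[str], question: str) -> list[dict]:
    """Bundle images and a question into a single user turn.

    Assumed schema: each content part is a dict with a "type" key,
    images first and the text question last.
    """
    if not image_urls:
        raise ValueError("at least one image is required for visual question answering")
    content = [{"type": "image", "url": url} for url in image_urls]
    content.append({"type": "text", "text": question})
    return [{"role": "user", "content": content}]


if __name__ == "__main__":
    # Placeholder URLs for illustration only.
    messages = build_vqa_messages(
        ["https://example.com/q1-sales.png", "https://example.com/q2-sales.png"],
        "Summarize the trend across these two charts.",
    )
    print(messages)
```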

4. Enhanced Multilingual Support

- Trained on over 200 languages, with more than 100 languages having over 1 billion tokens each, ensuring robust performance across a diverse linguistic landscape.

- Provides fine-tuned support for 12 core languages, including Arabic, English, French, German, Hindi, Indonesian, Italian, Portuguese, Spanish, Tagalog, Thai, and Vietnamese, enhancing its utility in global applications.
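
In an application, the list of core languages can act as a simple guardrail before a request is sent to the model. The sketch below is one hedged way to do that. The language set is copied from the list above. The helper names and the fallback to English are assumptions, not part of any Llama 4 API.

```python
# The 12 core languages listed in this article.
CORE_LANGUAGES = frozenset({
    "Arabic", "English", "French", "German", "Hindi", "Indonesian",
    "Italian", "Portuguese", "Spanish", "Tagalog", "Thai", "Vietnamese",
})


def is_core_language(language: str) -> bool:
    """Case-insensitive check against the fine-tuned language list."""
    return language.strip().title() in CORE_LANGUAGES


def pick_reply_language(requested: str, fallback: str = "English") -> str:
    """Reply in the requested language if it is a core language, else fall back."""
    return requested.strip().title() if is_core_language(requested) else fallback


if __name__ == "__main__":
    print(pick_reply_language("hindi"))
    print(pick_reply_language("Japanese"))
```

Languages outside this list were still part of pretraining, so a fallback is a conservative product decision rather than a hard technical limit.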

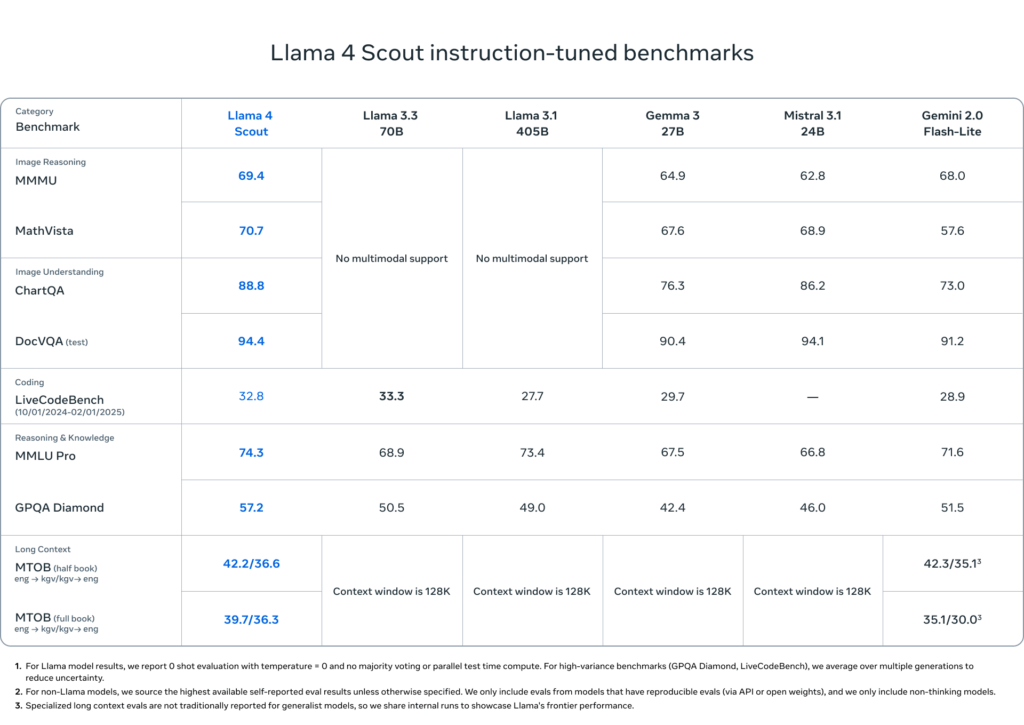

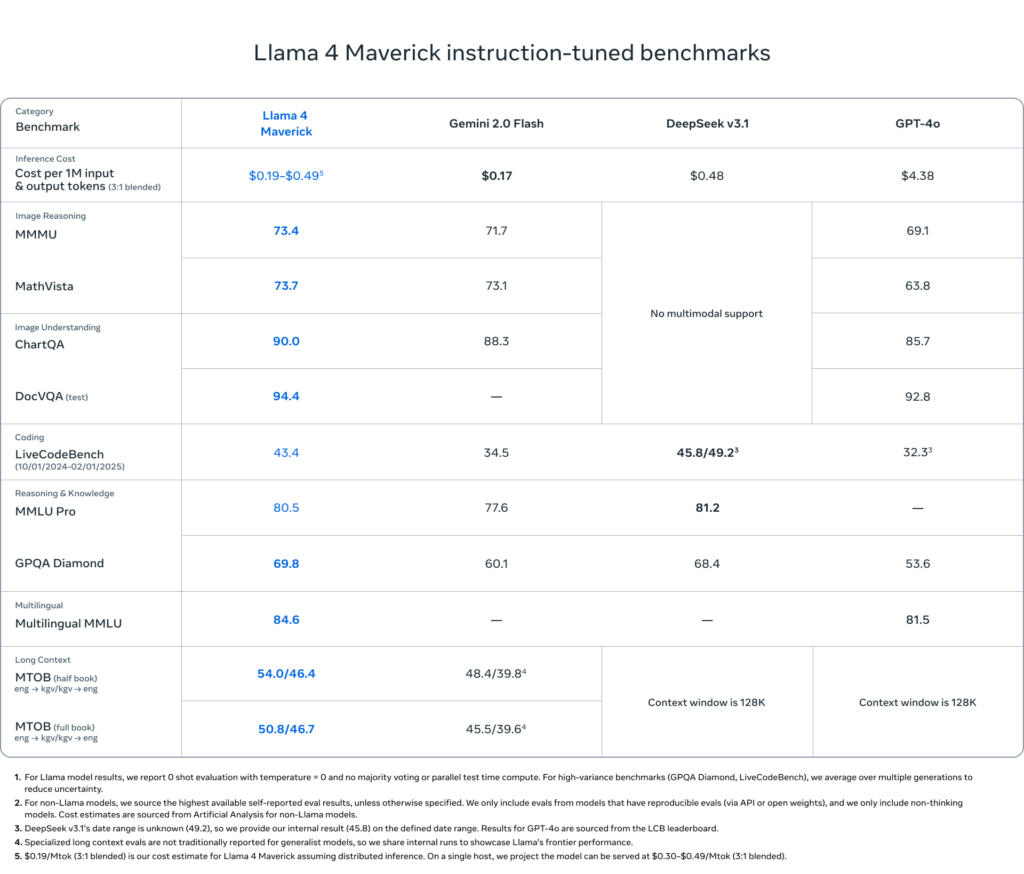

5. Improved Performance Benchmarks

- Llama 4 Scout outperforms models like Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1 in reasoning, coding, and image-related tasks, according to Meta’s reported benchmarks.

- Llama 4 Maverick competes effectively with models such as GPT-4o and Gemini 2.0 Flash in multimodal and long-context tasks, achieving comparable results with fewer active parameters.

These advancements underscore Llama 4’s enhanced capabilities in handling complex and diverse AI tasks efficiently.

Llama 4 New Models Breakdown: Scout, Maverick & Behemoth

- Llama 4 Scout

Llama 4 Scout is a versatile, developer-friendly model with 17 billion active parameters, 16 experts, and a total of 109 billion parameters. It delivers high-end performance while remaining efficient enough to run on a single NVIDIA H100 GPU.

One of Scout’s standout features is its 10 million-token context window, a significant leap from the 128K tokens in Llama 3. This allows it to handle multi-document summarization, deep codebase analysis, and long-form reasoning tasks without context loss.

Scout is built on the iRoPE architecture, which interleaves attention layers that use no positional embeddings with layers that use rotary position embeddings (RoPE), and it applies inference-time temperature scaling of attention. Together, these enable strong generalization over extended sequences. Thanks to its multimodal pretraining on diverse image and video stills, Scout also performs well in visual tasks, including image understanding, grounding, and visual question answering.

It’s available openly via Llama.com and Hugging Face, making it deployable across cloud platforms, edge devices, and enterprise-grade environments.

Source: Meta AI

- Llama 4 Maverick

Llama 4 Maverick is a high-performance multimodal language model designed for complex enterprise use cases. It contains 17 billion active parameters, 128 experts, and a total of 400 billion parameters.

Despite its scale, Maverick is optimized to run on a single NVIDIA H100 DGX host, making it accessible for businesses with demanding AI workloads.

It performs strongly across coding, reasoning, multilingual tasks, long-context interactions, and image processing. Meta’s reported benchmarks show Maverick outperforming GPT-4o and Gemini 2.0 Flash, and achieving results comparable to the larger DeepSeek v3.1 in reasoning and code generation.

An experimental chat version achieved an Elo score of 1417 on LMArena, which points to strong quality in conversational tasks. Compared to Llama 3.3 70B, Maverick delivers better results at a lower cost, making it a practical option for production.

Source: Meta AI

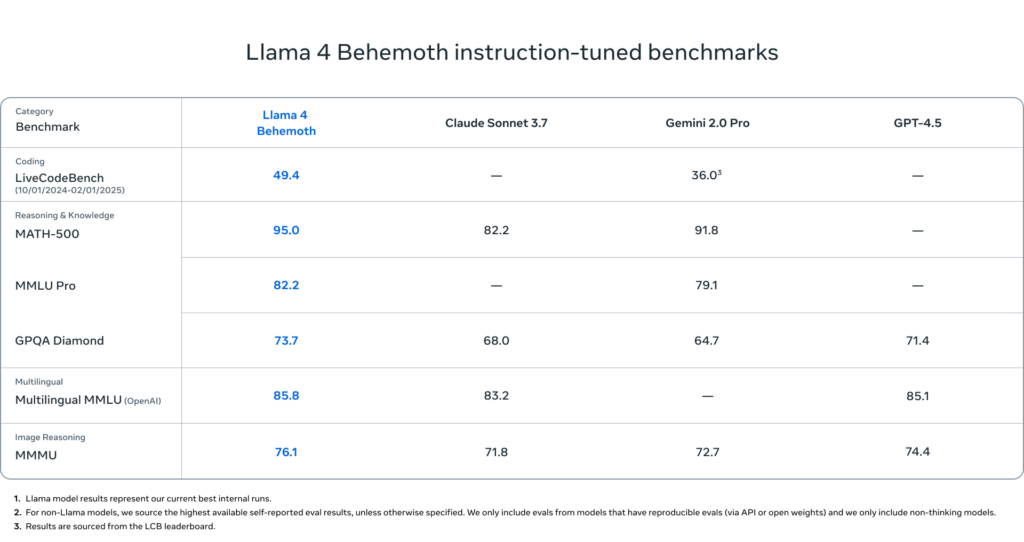

- Llama 4 Behemoth

Llama 4 Behemoth is, according to Meta, one of the smartest models yet. It is still in training and not publicly released. It is designed not for direct use, but to train and improve other models like Scout and Maverick using a technique called distillation, in which a smaller model learns from the outputs of a larger one.
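
The sketch below shows the general idea behind distillation: a student model is trained to match the teacher's softened output distribution (soft targets) as well as the correct label (hard target). This is a generic textbook formulation written in plain Python, not Meta's actual loss function. The function names, the fixed `soft_weight`, and the `temperature` value are assumptions chosen for illustration.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert logits to probabilities; higher temperature gives a flatter distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: list[float],
    teacher_logits: list[float],
    true_label: int,
    soft_weight: float = 0.5,
    temperature: float = 2.0,
) -> float:
    """Weighted sum of soft-target and hard-target cross-entropy for one example."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    student_hard = softmax(student_logits)

    # Soft target: how far the student's distribution is from the teacher's.
    soft_loss = -sum(t * math.log(s) for t, s in zip(teacher_probs, student_soft))
    # Hard target: ordinary cross-entropy against the correct label.
    hard_loss = -math.log(student_hard[true_label])
    return soft_weight * soft_loss + (1.0 - soft_weight) * hard_loss


if __name__ == "__main__":
    teacher = [2.0, 0.5, -1.0]
    print(distillation_loss([2.0, 0.5, -1.0], teacher, true_label=0))  # student agrees
    print(distillation_loss([-1.0, 0.5, 2.0], teacher, true_label=0))  # student disagrees
```

A student that agrees with the teacher receives a lower loss than one that disagrees, which is the signal that pulls the smaller model toward the larger model's behavior.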

Behemoth features 288 billion active parameters, 16 experts, and a total parameter count nearing 2 trillion. It’s not just large – it’s foundational.

To support its training, a new infrastructure was built incorporating asynchronous reinforcement learning, prompt difficulty sampling, and a custom loss function to balance the learning curve across tasks.

During post-training, the focus was on performance in complex tasks by removing basic examples and emphasizing difficult prompts. This approach helps Behemoth outperform in advanced reasoning, coding, and multilingual understanding. In the future, it could also be used by enterprises to train custom foundation models, making it a leap forward in next-generation AI development.
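
The data-pruning step described above can be pictured as a simple filter over a prompt set. The sketch below is only an illustration of that idea. The article does not say how difficulty was measured, so the `pass_rate` field (the fraction of attempts a reference model gets right), the threshold, and all names are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prompt:
    text: str
    pass_rate: float  # assumed difficulty proxy: 1.0 means always solved (easy)


def keep_hard_prompts(prompts: list[Prompt], max_pass_rate: float = 0.5) -> list[Prompt]:
    """Drop prompts that are solved too often, keeping the harder remainder."""
    return [p for p in prompts if p.pass_rate <= max_pass_rate]


if __name__ == "__main__":
    pool = [
        Prompt("What is 2 + 2?", pass_rate=0.99),
        Prompt("Prove that the sum of two odd numbers is even.", pass_rate=0.45),
        Prompt("Find the race condition in this scheduler.", pass_rate=0.10),
    ]
    for prompt in keep_hard_prompts(pool):
        print(prompt.text)
```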

Source: Meta AI

Real-World Use Cases of Llama 4 in Business

1. Smarter Customer Support with Multimodal AI

- Image-Based Troubleshooting

Customers can share screenshots or error images, which Llama 4 can interpret to help resolve issues.

- Multilingual Chat Support

Serve users in over 50 languages with responses tailored to the context of each query.

- Emotion-Aware Interactions

The AI can detect tone and sentiment to handle sensitive cases more thoughtfully or escalate when needed.

2. Boosting Content Creation and Marketing

- Visual-to-Text Generation

Turn product images into social media captions or ad headlines automatically.

- Localized Content Creation

Create region-specific blog posts, product descriptions, and videos in multiple languages.

- Personalized Campaigns

Use customer data and images to generate tailored email marketing content.

3. Automating Document Workflows in Enterprises

- Contract Analysis

Scan legal documents and extract important clauses or risks in seconds.

- Invoice Processing

Understand scanned invoices, even with handwritten notes or low-quality images.

- Research Summarization

Review technical papers and create summaries for quick understanding.

4. Enabling AI in Financial Services

- Fraud Detection

Analyze transaction patterns and document submissions for suspicious behavior.

- Personalized Financial Insights

Convert market data and client profiles into easy-to-understand investment reports.

- Regulatory Monitoring

Ensure all internal communications follow compliance standards.

5. Improving Healthcare with Multimodal AI

- Medical Image Analysis

Cross-reference scans with medical history to assist in diagnosis.

- Clinical Research Support

Quickly analyze large datasets from clinical trials and academic studies.

- Patient Education

Create personalized visuals and summaries of treatment plans.

6. Optimizing Manufacturing and Logistics

- Visual Inspection

Identify defects in products by analyzing images from the production line.

- Training in Multiple Languages

Automatically generate instruction manuals in different languages for global teams.

- Predictive Maintenance

Use data from sensors and technician notes to detect early signs of equipment failure.

Predictive AI reduces downtime, improves product quality, and enhances workforce training.

Why Use Llama 4?

Llama 4 offers a powerful combination of openness, scalability, and adaptability, making it one of the most practical AI models for enterprise use in 2025. Deployment flexibility is a key reason businesses across industries are choosing Llama 4 for their AI-powered applications and multimodal AI solutions.

Llama 4 can be deployed flexibly – either in the cloud for scalability or on-premises for maximum data control. This means organizations can optimize for performance, compliance, or cost-efficiency, depending on their IT and security requirements. It’s a deployment strategy designed around your business, not someone else’s infrastructure.

How Can Businesses Access Llama 4?

The released Llama 4 models can be downloaded from Llama.com and Hugging Face. Organizations can also evaluate Llama 4’s capabilities in real time via its integration in platforms such as WhatsApp, Messenger, Instagram Direct, and through a web-based interface. This dual access, via downloadable models and live demos, offers flexibility for both technical teams and decision-makers exploring AI model deployment for business use.

Conclusion

Whether you’re optimizing content operations, improving customer support, or scaling intelligent systems securely, Llama 4 offers a foundation that’s powerful, practical, and built for real-world use. It’s time for forward-thinking businesses to explore how this next-generation model can unlock smarter, faster, and more adaptive solutions.

Frequently Asked Questions

What is Llama 4 and how is it different from previous versions?

Llama 4 is Meta’s most advanced open-source AI model, designed for enterprise-scale use with multimodal input capabilities. Unlike Llama 3, it processes both text and images, offers larger context windows (up to 10 million tokens), and uses a Mixture-of-Experts (MoE) architecture for efficient performance.

How can businesses use Llama 4 for AI-powered workflows?

Businesses can use Llama 4 to automate customer support, generate content, analyze legal documents, detect fraud, and power multilingual chatbots. Its multimodal AI handles images and text, making it ideal for complex, real-world enterprise applications.

What are the main differences between Llama Scout, Maverick, and Behemoth?

- Scout: Lightweight, single-GPU model with 10M token context – great for developers and startups.

- Maverick: High-performance, enterprise-grade multimodal model with 1M token context.

- Behemoth: Not publicly released; used to train other models via AI distillation and advanced research.

Is Llama 4 available for commercial use?

Yes. Llama 4’s weights are openly available and licensed for commercial use under Meta’s Llama 4 Community License, subject to its terms. Businesses can download, fine-tune, and deploy it without vendor lock-in, which suits data-sensitive and regulated industries.

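How do I access Llama 4 for my business applications?

The released Llama 4 models can be downloaded from Llama.com and Hugging Face and deployed in the cloud or on-premises. You can also try Llama 4 through WhatsApp, Messenger, Instagram Direct, and a web-based interface.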

What are the key enterprise benefits of Llama 4?

Llama 4 offers multimodal intelligence (text + image), extended token memory, open access, fine-tuning flexibility, and scalable deployment options. This makes it ideal for businesses looking to build AI tools with full control and adaptability.

Why choose Llama 4 over proprietary AI models like GPT-4 or Gemini?

Llama 4 provides enterprise-grade performance without vendor dependency. It’s open-source, cost-effective, customizable, and supports longer context and multimodal input – making it a more transparent and flexible choice for businesses.